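Using cuBLAS (5): cuBLASXt

The cuBLASXt API of cuBLAS exposes a multi-GPU capable host interface: when using this API, the application only needs to allocate the required matrices in host memory. There is no restriction on matrix size as long as the matrices fit in host memory. The cuBLASXt API takes care of allocating memory across the designated GPUs, dispatching the workload among them, and finally retrieving the results back to the host. The cuBLASXt API supports only the compute-intensive BLAS 3 routines (for example, matrix-matrix operations), where the PCI transfers to and from the GPU can be amortized. The cuBLASXt API has its own header file, cublasXt.h.

Starting with release 8.0, the cuBLASXt API allows any of the matrices to be located on a GPU device.

Note: the cuBLASXt API is supported only on 64-bit platforms.

Tiling design approach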

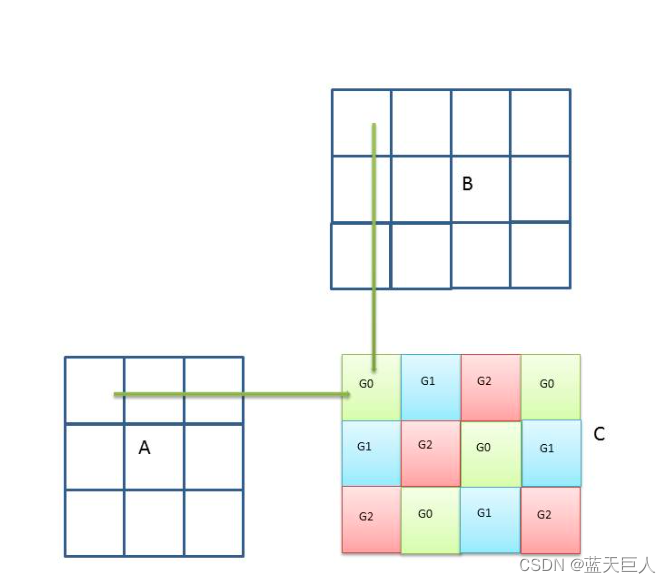

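To share the workload between multiple GPUs, the cuBLASXt API uses a tiling strategy: every matrix is divided into square tiles of user-controllable dimension BlockDim x BlockDim. The resulting matrix tiling defines the static scheduling policy: each resulting tile is assigned to a GPU in a round-robin fashion. One CPU thread is created per GPU, and it is responsible for the memory transfers and cuBLAS operations needed to compute all the tiles it owns. From a performance point of view, because of this static scheduling strategy, it is better that the compute capability and the PCI bandwidth are the same for every GPU. The figure above illustrates the tile distribution among 3 GPUs. To compute the first tile G0 of C, CPU thread 0, which is responsible for GPU0, has to load 3 tiles from the first row of A and the tiles from the first column of B in a pipelined fashion, so that memory transfers overlap with computation, and sum the results into the first tile G0 of C before moving on to the next tile G0.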
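The round-robin assignment can be pictured with a small host-side sketch. The helper names `tile_count` and `tile_owner` are hypothetical, and the column-by-column numbering of the tiles is an assumption made for illustration; the traversal order that cuBLASXt actually uses is not specified in this text.

```c
#include <assert.h>
#include <stddef.h>

/* Number of tiles needed to cover `dim` elements with tiles of size
   `block_dim` (ceiling division, so a partial last tile still counts). */
size_t tile_count(size_t dim, size_t block_dim)
{
    assert(block_dim > 0);
    return (dim + block_dim - 1) / block_dim;
}

/* Hypothetical static schedule: the tiles of C are numbered column by
   column and dealt to the GPUs in round-robin order. The numbering is an
   assumption, not the documented cuBLASXt behavior. */
int tile_owner(size_t tile_row, size_t tile_col,
               size_t tiles_per_col, int n_gpus)
{
    assert(n_gpus > 0 && tile_row < tiles_per_col);
    size_t linear = tile_col * tiles_per_col + tile_row;
    return (int)(linear % (size_t)n_gpus);
}
```

With 3 GPUs and 3 tiles per column, every GPU receives one tile of each column, which is the balanced case the static schedule is designed for.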

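When the tile dimension is not an exact multiple of the dimensions of C, some tiles on the right border and/or the bottom border are only partially filled. The current implementation does not pad the incomplete tiles; instead it keeps track of them and issues correspondingly reduced cuBLAS operations, so no extra computation is done. However, this can still lead to some load imbalance when the GPUs do not all have the same number of incomplete tiles to work on.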
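As a rough illustration of how an incomplete border tile can be handled without padding, the sketch below computes the real extent of a tile along one dimension. The function name `tile_extent` is hypothetical and is not part of the cuBLASXt API.

```c
#include <assert.h>
#include <stddef.h>

/* Extent, along one dimension, of tile number `tile_idx` when `dim`
   elements are cut into tiles of size `block_dim`. Interior tiles are
   full; the last tile keeps only the remaining elements (no padding). */
size_t tile_extent(size_t dim, size_t block_dim, size_t tile_idx)
{
    assert(block_dim > 0);
    size_t start = tile_idx * block_dim;
    assert(start < dim);
    size_t remaining = dim - start;
    return remaining < block_dim ? remaining : block_dim;
}
```

For a 10000-row matrix and a block dimension of 4096, the third tile row has only 1808 rows, so the operation issued for it is correspondingly smaller.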

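When one or more matrices are located on GPU devices, the same tiling approach and workload sharing are applied. In that case the memory transfers are done between devices. However, when the computation of a tile and some of its data are located on the same GPU device, the memory transfer of that local data to or from the tile is bypassed and the GPU operates directly on the local data. This can significantly improve performance, especially when only one GPU is used for the computation.

The matrices can be located on any GPU device and do not need to be on the same one. Furthermore, a matrix can even be located on a GPU device that does not participate in the computation.

In contrast to the cuBLAS API, even if all matrices are located on the same device, the cuBLASXt API is still a blocking API from the host's point of view: wherever the data is located, the results are valid when the call returns and no device synchronization is required.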

Hybrid CPU-GPU computation

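For very large problems, the cuBLASXt API can offload part of the computation to the host CPU. This feature is configured with the routines cublasXtSetCpuRoutine() and cublasXtSetCpuRatio(). The workload assigned to the CPU is set aside first: it is simply a percentage of the resulting matrix, taken from the bottom or the right side, whichever dimension is larger. The GPU tiling is then done on the reduced result matrix.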
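One way to read the rule above is sketched below. The type `hybrid_split` and the function `split_result` are hypothetical, and both the truncating rounding and the tie-break for square matrices are assumptions; the library may round or choose differently.

```c
#include <assert.h>
#include <stddef.h>

/* Sizes of the part of the m x n result kept by the GPUs and of the
   part set aside for the CPU. */
typedef struct {
    size_t gpu_rows, gpu_cols;
    size_t cpu_rows, cpu_cols;
} hybrid_split;

/* Assumed interpretation: the CPU share is cut from the larger
   dimension, bottom rows when m >= n, right columns otherwise.
   Truncation toward zero is an assumption. */
hybrid_split split_result(size_t m, size_t n, float ratio)
{
    assert(ratio >= 0.0f && ratio <= 1.0f);
    hybrid_split s = { m, n, 0, 0 };
    if (m >= n) {
        s.cpu_rows = (size_t)((double)m * ratio);
        s.cpu_cols = n;
        s.gpu_rows = m - s.cpu_rows;
    } else {
        s.cpu_cols = (size_t)((double)n * ratio);
        s.cpu_rows = m;
        s.gpu_cols = n - s.cpu_cols;
    }
    return s;
}
```

Under these assumptions, a 1000 x 500 result with a ratio of 0.25 leaves a 750 x 500 block for the GPUs, and the tiling is then applied to that block only.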

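If any of the matrices is located on a GPU device, the feature is ignored and all computation is done on the GPUs only.

This feature should be used with caution because it can interfere with the CPU threads responsible for feeding the GPUs.

Currently, only the `cublasXt<t>gemm()` routines support this feature.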

Results reproducibility

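Currently, all cuBLASXt API routines from a given toolkit version generate the same bit-wise results when the following conditions are met:

- All GPUs participating in the computation have the same compute capability and the same number of SMs.

- The tile size is kept the same between runs.

- Either the CPU hybrid computation is not used, or the CPU BLAS provided is also guaranteed to produce reproducible results.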

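cuBLASXt API Data Types

cublasXtHandle_t

The cublasXtHandle_t type is a pointer type to an opaque structure holding the cuBLASXt API context. The context must be initialized using cublasXtCreate(), and the returned handle must be passed to all subsequent cuBLASXt API function calls. The context should be destroyed at the end using cublasXtDestroy().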

cublasXtOpType_t

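The cublasXtOpType_t type enumerates the four data types supported by the BLAS routines. This enum is used as a parameter of the routines cublasXtSetCpuRoutine() and cublasXtSetCpuRatio() to set up the hybrid configuration.

| Value | Meaning |
|---|---|
| CUBLASXT_FLOAT | float or single precision type |
| CUBLASXT_DOUBLE | double precision type |
| CUBLASXT_COMPLEX | single precision complex |
| CUBLASXT_DOUBLECOMPLEX | double precision complex |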

cublasXtBlasOp_t

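The cublasXtBlasOp_t type enumerates the BLAS3 or BLAS3-like routines supported by the cuBLASXt API. This enum is used as a parameter of the routines cublasXtSetCpuRoutine() and cublasXtSetCpuRatio() to set up the hybrid configuration.

| Value | Meaning |
|---|---|
| CUBLASXT_GEMM | GEMM routine |
| CUBLASXT_SYRK | SYRK routine |
| CUBLASXT_HERK | HERK routine |
| CUBLASXT_SYMM | SYMM routine |
| CUBLASXT_HEMM | HEMM routine |
| CUBLASXT_TRSM | TRSM routine |
| CUBLASXT_SYR2K | SYR2K routine |
| CUBLASXT_HER2K | HER2K routine |
| CUBLASXT_SPMM | SPMM routine |
| CUBLASXT_SYRKX | SYRKX routine |
| CUBLASXT_HERKX | HERKX routine |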

cublasXtPinningMemMode_t

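This type is used to enable or disable the pinning memory mode through the routine cublasXtSetPinningMemMode().

| Value | Meaning |
|---|---|
| CUBLASXT_PINNING_DISABLED | the Pinning Memory mode is disabled |
| CUBLASXT_PINNING_ENABLED | the Pinning Memory mode is enabled |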

cuBLASXt API Helper Function Reference

cublasXtCreate()

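cublasStatus_t cublasXtCreate(cublasXtHandle_t *handle)

This function initializes the cuBLASXt API and creates a handle to an opaque structure holding the cuBLASXt API context. It allocates hardware resources on the host and the device and must be called before any other cuBLASXt API call.

| Return Value | Meaning |
|---|---|
| CUBLAS_STATUS_SUCCESS | the initialization succeeded |
| CUBLAS_STATUS_ALLOC_FAILED | the resources could not be allocated |
| CUBLAS_STATUS_NOT_SUPPORTED | cuBLASXt API is only supported on 64-bit platforms |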

cublasXtDestroy()

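cublasStatus_t cublasXtDestroy(cublasXtHandle_t handle)

This function releases the hardware resources used by the cuBLASXt API context. The release of GPU resources may be deferred until the application exits. This function is usually the last call made to the cuBLASXt API with a particular handle.

| Return Value | Meaning |
|---|---|
| CUBLAS_STATUS_SUCCESS | the shut down succeeded |
| CUBLAS_STATUS_NOT_INITIALIZED | the library was not initialized |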

cublasXtDeviceSelect()

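cublasStatus_t cublasXtDeviceSelect(cublasXtHandle_t handle, int nbDevices, int deviceId[])

This function allows the user to provide the number of GPU devices, and their respective IDs, that will participate in subsequent cuBLASXt API math function calls. It creates a cuBLAS context for every GPU in the list. Currently the device configuration is static and cannot be changed between math function calls; in that regard, this function should be called only once, after cublasXtCreate(). To run multiple configurations, create multiple cuBLASXt API contexts.

| Return Value | Meaning |
|---|---|
| CUBLAS_STATUS_SUCCESS | the user call was successful |
| CUBLAS_STATUS_INVALID_VALUE | access to at least one of the devices could not be obtained, or a cuBLAS context could not be created on at least one of the devices |
| CUBLAS_STATUS_ALLOC_FAILED | some resources could not be allocated |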

cublasXtSetBlockDim()

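cublasStatus_t cublasXtSetBlockDim(cublasXtHandle_t handle, int blockDim)

This function allows the user to set the block dimension used for tiling the matrices in subsequent math function calls. Matrices are split into square tiles of dimension blockDim x blockDim. This function can be called at any time and takes effect for the following math function calls. The block dimension should be chosen so as to optimize the math operation and to ensure that PCI transfers overlap well with computation.

| Return Value | Meaning |
|---|---|
| CUBLAS_STATUS_SUCCESS | the call has been successful |
| CUBLAS_STATUS_INVALID_VALUE | blockDim <= 0 |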

cublasXtGetBlockDim()

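cublasStatus_t cublasXtGetBlockDim(cublasXtHandle_t handle, int *blockDim)

This function allows the user to query the block dimension used for tiling the matrices.

| Return Value | Meaning |
|---|---|
| CUBLAS_STATUS_SUCCESS | the call has been successful |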

cublasXtSetCpuRoutine()

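cublasStatus_t cublasXtSetCpuRoutine(cublasXtHandle_t handle, cublasXtBlasOp_t blasOp, cublasXtOpType_t type, void *blasFunctor)

This function allows the user to provide a CPU implementation of the corresponding BLAS routine. It can be used together with cublasXtSetCpuRatio() to define a hybrid computation between the CPU and the GPUs. Currently, the hybrid feature is supported only for the xGEMM routines.

| Return Value | Meaning |
|---|---|
| CUBLAS_STATUS_SUCCESS | the call has been successful |
| CUBLAS_STATUS_INVALID_VALUE | blasOp or type define an invalid combination |
| CUBLAS_STATUS_NOT_SUPPORTED | CPU-GPU hybridization for that routine is not supported |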

cublasXtSetCpuRatio()

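cublasStatus_t cublasXtSetCpuRatio(cublasXtHandle_t handle, cublasXtBlasOp_t blasOp, cublasXtOpType_t type, float ratio)

This function allows the user to define the percentage of the workload that should be done on the CPU in a hybrid computation. It can be used together with cublasXtSetCpuRoutine() to define a hybrid computation between the CPU and the GPUs. Currently, the hybrid feature is supported only for the xGEMM routines.

| Return Value | Meaning |
|---|---|
| CUBLAS_STATUS_SUCCESS | the call has been successful |
| CUBLAS_STATUS_INVALID_VALUE | blasOp or type define an invalid combination |
| CUBLAS_STATUS_NOT_SUPPORTED | CPU-GPU hybridization for that routine is not supported |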

cublasXtSetPinningMemMode()

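cublasStatus_t cublasXtSetPinningMemMode(cublasXtHandle_t handle, cublasXtPinningMemMode_t mode)

This function allows the user to enable or disable the pinning memory mode. When enabled, the matrices passed in subsequent cuBLASXt API calls are pinned and unpinned with the CUDART routines cudaHostRegister() and cudaHostUnregister(), respectively, if they are not already pinned. If a matrix happens to be only partially pinned, it is not pinned either. Pinning memory improves PCI transfer performance and allows PCI memory transfers to overlap with computation. However, pinning and unpinning memory takes time that might not be amortized. It is therefore advised that the user pins the memory directly with cudaMallocHost() or cudaHostRegister() and unpins it only when the computation sequence is completed. By default, the pinning memory mode is disabled.

The pinning memory mode should not be enabled when matrices used in different cuBLASXt API calls overlap. cuBLASXt determines whether a matrix is pinned by checking only its first address with cudaHostGetFlags(), so it cannot know whether the matrix is already partially pinned. This is especially true in multi-threaded applications, where memory could be partially or totally pinned or unpinned while another thread is accessing it.

| Return Value | Meaning |
|---|---|
| CUBLAS_STATUS_SUCCESS | the call has been successful |
| CUBLAS_STATUS_INVALID_VALUE | the mode value is different from CUBLASXT_PINNING_DISABLED and CUBLASXT_PINNING_ENABLED |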

cublasXtGetPinningMemMode()

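cublasStatus_t cublasXtGetPinningMemMode(cublasXtHandle_t handle, cublasXtPinningMemMode_t *mode)

This function allows the user to query the pinning memory mode. By default, the pinning memory mode is disabled.

| Return Value | Meaning |
|---|---|
| CUBLAS_STATUS_SUCCESS | the call has been successful |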

cuBLASXt API Math Functions Reference

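This chapter describes the actual linear algebra routines supported by the cuBLASXt API. The abbreviations `<type>` for the data type and `<t>` for the corresponding short type are used to keep the presentation of the functions concise. Unless otherwise specified, `<type>` and `<t>` have the following meanings:

| `<type>` | `<t>` | Meaning |
|---|---|---|
| float | 's' or 'S' | real single-precision |
| double | 'd' or 'D' | real double-precision |
| cuComplex | 'c' or 'C' | complex single-precision |
| cuDoubleComplex | 'z' or 'Z' | complex double-precision |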

`cublasXt<t>gemm()`

cublasStatus_t cublasXtSgemm(cublasXtHandle_t handle,cublasOperation_t transa, cublasOperation_t transb,size_t m, size_t n, size_t k,const float *alpha,const float *A, int lda,const float *B, int ldb,const float *beta,float *C, int ldc)

cublasStatus_t cublasXtDgemm(cublasXtHandle_t handle,cublasOperation_t transa, cublasOperation_t transb,int m, int n, int k,const double *alpha,const double *A, int lda,const double *B, int ldb,const double *beta,double *C, int ldc)

cublasStatus_t cublasXtCgemm(cublasXtHandle_t handle,cublasOperation_t transa, cublasOperation_t transb,int m, int n, int k,const cuComplex *alpha,const cuComplex *A, int lda,const cuComplex *B, int ldb,const cuComplex *beta,cuComplex *C, int ldc)

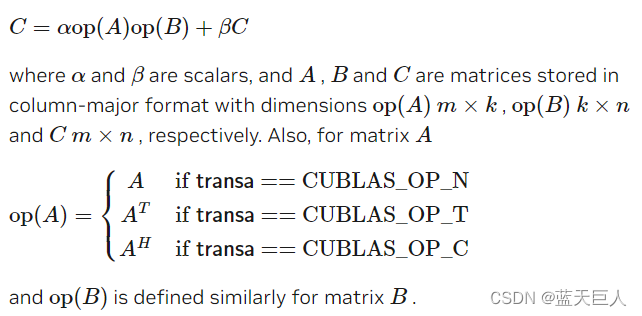

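cublasStatus_t cublasXtZgemm(cublasXtHandle_t handle,cublasOperation_t transa, cublasOperation_t transb,int m, int n, int k,const cuDoubleComplex *alpha,const cuDoubleComplex *A, int lda,const cuDoubleComplex *B, int ldb,const cuDoubleComplex *beta,cuDoubleComplex *C, int ldc)

This function performs the matrix-matrix multiplication C = α op(A) op(B) + β C, where α and β are scalars and A, B and C are matrices stored in column-major format, with op(A) of dimensions m x k, op(B) of dimensions k x n and C of dimensions m x n. op(A) is A, its transpose, or its conjugate transpose, depending on transa; op(B) is defined in the same way from transb.

| Param. | Memory | In/out | Meaning |
|---|---|---|---|
| handle | | input | handle to the cuBLASXt API context. |
| transa | | input | operation op(A) that is non- or (conj.) transpose. |
| transb | | input | operation op(B) that is non- or (conj.) transpose. |
| m | | input | number of rows of matrix op(A) and C. |
| n | | input | number of columns of matrix op(B) and C. |
| k | | input | number of columns of op(A) and rows of op(B). |
| alpha | host | input | `<type>` scalar used for multiplication. |
| A | host or device | input | `<type>` array of dimensions lda x k with lda >= max(1, m) if transa == CUBLAS_OP_N, and lda x m with lda >= max(1, k) otherwise. |
| lda | | input | leading dimension of the two-dimensional array used to store matrix A. |
| B | host or device | input | `<type>` array of dimensions ldb x n with ldb >= max(1, k) if transb == CUBLAS_OP_N, and ldb x k with ldb >= max(1, n) otherwise. |
| ldb | | input | leading dimension of the two-dimensional array used to store matrix B. |
| beta | host | input | `<type>` scalar used for multiplication; if beta == 0, C does not have to be a valid input. |
| C | host or device | in/out | `<type>` array of dimensions ldc x n with ldc >= max(1, m). |
| ldc | | input | leading dimension of the two-dimensional array used to store matrix C. |

The possible error values returned by this function and their meanings are listed below.

| Error Value | Meaning |
|---|---|
| CUBLAS_STATUS_SUCCESS | the operation completed successfully |
| CUBLAS_STATUS_NOT_INITIALIZED | the library was not initialized |
| CUBLAS_STATUS_INVALID_VALUE | the parameters m, n, k < 0 |
| CUBLAS_STATUS_EXECUTION_FAILED | the function failed to launch on the GPU |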
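To make the role of the leading dimensions concrete, here is a plain-C reference for the simplest GEMM case, op(A) = A and op(B) = B, in column-major storage. The function `ref_sgemm_nn` is a hypothetical host-side helper written for illustration, not a cuBLASXt routine; it can serve as a small oracle when checking results on tiny matrices.

```c
#include <assert.h>
#include <stddef.h>

/* Reference C = alpha * A * B + beta * C in column-major storage.
   A is m x k, B is k x n, C is m x n; element (i, j) of a matrix with
   leading dimension ld lives at index i + j * ld. */
void ref_sgemm_nn(size_t m, size_t n, size_t k, float alpha,
                  const float *A, size_t lda,
                  const float *B, size_t ldb,
                  float beta, float *C, size_t ldc)
{
    assert(lda >= m && ldb >= k && ldc >= m);
    for (size_t j = 0; j < n; ++j) {
        for (size_t i = 0; i < m; ++i) {
            float acc = 0.0f;
            for (size_t p = 0; p < k; ++p)
                acc += A[i + p * lda] * B[p + j * ldb];
            /* When beta == 0, C is not read (BLAS convention). */
            float prev = (beta == 0.0f) ? 0.0f : beta * C[i + j * ldc];
            C[i + j * ldc] = alpha * acc + prev;
        }
    }
}
```

Because storage is column-major, the 2 x 2 matrix with rows (1, 2) and (3, 4) is passed as the array {1, 3, 2, 4} with a leading dimension of 2.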

`cublasXt<t>hemm()`

cublasStatus_t cublasXtChemm(cublasXtHandle_t handle,cublasSideMode_t side, cublasFillMode_t uplo,size_t m, size_t n,const cuComplex *alpha,const cuComplex *A, size_t lda,const cuComplex *B, size_t ldb,const cuComplex *beta,cuComplex *C, size_t ldc)

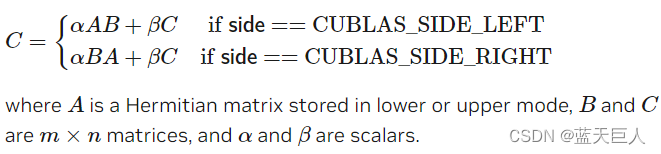

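cublasStatus_t cublasXtZhemm(cublasXtHandle_t handle,cublasSideMode_t side, cublasFillMode_t uplo,size_t m, size_t n,const cuDoubleComplex *alpha,const cuDoubleComplex *A, size_t lda,const cuDoubleComplex *B, size_t ldb,const cuDoubleComplex *beta,cuDoubleComplex *C, size_t ldc)

This function performs the Hermitian matrix-matrix multiplication C = α A B + β C if side == CUBLAS_SIDE_LEFT, or C = α B A + β C if side == CUBLAS_SIDE_RIGHT, where A is a Hermitian matrix stored in lower or upper mode, B and C are m x n matrices, and α and β are scalars.

| Param. | Memory | In/out | Meaning |
|---|---|---|---|
| handle | | input | handle to the cuBLASXt API context. |
| side | | input | indicates if matrix A is on the left or right of B. |
| uplo | | input | indicates if the lower or upper part of matrix A is stored; the other Hermitian part is not referenced and is inferred from the stored elements. |
| m | | input | number of rows of matrix C and B, with matrix A sized accordingly. |
| n | | input | number of columns of matrix C and B, with matrix A sized accordingly. |
| alpha | host | input | `<type>` scalar used for multiplication. |
| A | host or device | input | `<type>` array of dimension lda x m with lda >= max(1, m) if side == CUBLAS_SIDE_LEFT, and lda x n with lda >= max(1, n) otherwise. The imaginary parts of the diagonal elements are assumed to be zero. |
| lda | | input | leading dimension of the two-dimensional array used to store matrix A. |
| B | host or device | input | `<type>` array of dimension ldb x n with ldb >= max(1, m). |
| ldb | | input | leading dimension of the two-dimensional array used to store matrix B. |
| beta | host | input | `<type>` scalar used for multiplication; if beta == 0, C does not have to be a valid input. |
| C | host or device | in/out | `<type>` array of dimension ldc x n with ldc >= max(1, m). |
| ldc | | input | leading dimension of the two-dimensional array used to store matrix C. |

The possible error values returned by this function and their meanings are listed below.

| Error Value | Meaning |
|---|---|
| CUBLAS_STATUS_SUCCESS | the operation completed successfully |
| CUBLAS_STATUS_NOT_INITIALIZED | the library was not initialized |
| CUBLAS_STATUS_INVALID_VALUE | the parameters m, n < 0 |
| CUBLAS_STATUS_EXECUTION_FAILED | the function failed to launch on the GPU |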

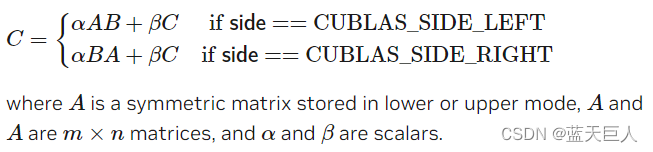

`cublasXt<t>symm()`

cublasStatus_t cublasXtSsymm(cublasXtHandle_t handle,cublasSideMode_t side, cublasFillMode_t uplo,size_t m, size_t n,const float *alpha,const float *A, size_t lda,const float *B, size_t ldb,const float *beta,float *C, size_t ldc)

cublasStatus_t cublasXtDsymm(cublasXtHandle_t handle,cublasSideMode_t side, cublasFillMode_t uplo,size_t m, size_t n,const double *alpha,const double *A, size_t lda,const double *B, size_t ldb,const double *beta,double *C, size_t ldc)

cublasStatus_t cublasXtCsymm(cublasXtHandle_t handle,cublasSideMode_t side, cublasFillMode_t uplo,size_t m, size_t n,const cuComplex *alpha,const cuComplex *A, size_t lda,const cuComplex *B, size_t ldb,const cuComplex *beta,cuComplex *C, size_t ldc)

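cublasStatus_t cublasXtZsymm(cublasXtHandle_t handle,cublasSideMode_t side, cublasFillMode_t uplo,size_t m, size_t n,const cuDoubleComplex *alpha,const cuDoubleComplex *A, size_t lda,const cuDoubleComplex *B, size_t ldb,const cuDoubleComplex *beta,cuDoubleComplex *C, size_t ldc)

This function performs the symmetric matrix-matrix multiplication C = α A B + β C if side == CUBLAS_SIDE_LEFT, or C = α B A + β C if side == CUBLAS_SIDE_RIGHT, where A is a symmetric matrix stored in lower or upper mode, B and C are m x n matrices, and α and β are scalars.

| Param. | Memory | In/out | Meaning |
|---|---|---|---|
| handle | | input | handle to the cuBLASXt API context. |
| side | | input | indicates if matrix A is on the left or right of B. |
| uplo | | input | indicates if the lower or upper part of matrix A is stored; the other symmetric part is not referenced and is inferred from the stored elements. |
| m | | input | number of rows of matrix C and B, with matrix A sized accordingly. |
| n | | input | number of columns of matrix C and B, with matrix A sized accordingly. |
| alpha | host | input | `<type>` scalar used for multiplication. |
| A | host or device | input | `<type>` array of dimension lda x m with lda >= max(1, m) if side == CUBLAS_SIDE_LEFT, and lda x n with lda >= max(1, n) otherwise. |
| lda | | input | leading dimension of the two-dimensional array used to store matrix A. |
| B | host or device | input | `<type>` array of dimension ldb x n with ldb >= max(1, m). |
| ldb | | input | leading dimension of the two-dimensional array used to store matrix B. |
| beta | host | input | `<type>` scalar used for multiplication; if beta == 0, C does not have to be a valid input. |
| C | host or device | in/out | `<type>` array of dimension ldc x n with ldc >= max(1, m). |
| ldc | | input | leading dimension of the two-dimensional array used to store matrix C. |

The possible error values returned by this function and their meanings are listed below.

| Error Value | Meaning |
|---|---|
| CUBLAS_STATUS_SUCCESS | the operation completed successfully |
| CUBLAS_STATUS_NOT_INITIALIZED | the library was not initialized |
| CUBLAS_STATUS_INVALID_VALUE | the parameters m, n < 0 |
| CUBLAS_STATUS_EXECUTION_FAILED | the function failed to launch on the GPU |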
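The uplo convention means that only one triangle of the symmetric matrix is ever read. The sketch below is a hypothetical host-side reference for the left-side case (C = α A B + β C); the names `sym_at` and `ref_dsymm_left` are illustrative and not part of any library.

```c
#include <assert.h>
#include <stddef.h>

/* Element (i, j) of a symmetric matrix of which only one triangle is
   stored: elements outside the stored triangle are read from their
   mirror position, so the other triangle is never touched. */
static double sym_at(const double *A, size_t lda, int lower,
                     size_t i, size_t j)
{
    int stored = lower ? (i >= j) : (i <= j);
    return stored ? A[i + j * lda] : A[j + i * lda];
}

/* Reference C = alpha * A * B + beta * C with A symmetric (m x m) on
   the left, B and C of size m x n, all column-major. */
void ref_dsymm_left(int lower, size_t m, size_t n, double alpha,
                    const double *A, size_t lda,
                    const double *B, size_t ldb,
                    double beta, double *C, size_t ldc)
{
    assert(lda >= m && ldb >= m && ldc >= m);
    for (size_t j = 0; j < n; ++j) {
        for (size_t i = 0; i < m; ++i) {
            double acc = 0.0;
            for (size_t p = 0; p < m; ++p)
                acc += sym_at(A, lda, lower, i, p) * B[p + j * ldb];
            double prev = (beta == 0.0) ? 0.0 : beta * C[i + j * ldc];
            C[i + j * ldc] = alpha * acc + prev;
        }
    }
}
```

The unreferenced triangle can hold arbitrary values, which is why a caller may leave it uninitialized.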

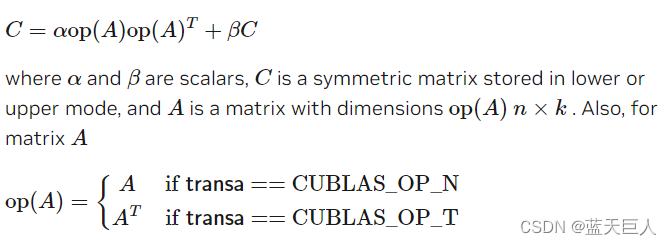

`cublasXt<t>syrk()`

cublasStatus_t cublasXtSsyrk(cublasXtHandle_t handle,cublasFillMode_t uplo, cublasOperation_t trans,int n, int k,const float *alpha,const float *A, int lda,const float *beta,float *C, int ldc)

cublasStatus_t cublasXtDsyrk(cublasXtHandle_t handle,cublasFillMode_t uplo, cublasOperation_t trans,int n, int k,const double *alpha,const double *A, int lda,const double *beta,double *C, int ldc)

cublasStatus_t cublasXtCsyrk(cublasXtHandle_t handle,cublasFillMode_t uplo, cublasOperation_t trans,int n, int k,const cuComplex *alpha,const cuComplex *A, int lda,const cuComplex *beta,cuComplex *C, int ldc)

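cublasStatus_t cublasXtZsyrk(cublasXtHandle_t handle,cublasFillMode_t uplo, cublasOperation_t trans,int n, int k,const cuDoubleComplex *alpha,const cuDoubleComplex *A, int lda,const cuDoubleComplex *beta,cuDoubleComplex *C, int ldc)

This function performs the symmetric rank-k update C = α op(A) op(A)^T + β C, where α and β are scalars, C is a symmetric matrix stored in lower or upper mode, and op(A) has dimensions n x k. op(A) is A if trans == CUBLAS_OP_N and A^T if trans == CUBLAS_OP_T.

| Param. | Memory | In/out | Meaning |
|---|---|---|---|
| handle | | input | handle to the cuBLASXt API context. |
| uplo | | input | indicates if the lower or upper part of matrix C is stored; the other symmetric part is not referenced and is inferred from the stored elements. |
| trans | | input | operation op(A) that is non- or transpose. |
| n | | input | number of rows of matrix op(A) and C. |
| k | | input | number of columns of matrix op(A). |
| alpha | host | input | `<type>` scalar used for multiplication. |
| A | host or device | input | `<type>` array of dimension lda x k with lda >= max(1, n) if trans == CUBLAS_OP_N, and lda x n with lda >= max(1, k) otherwise. |
| lda | | input | leading dimension of the two-dimensional array used to store matrix A. |
| beta | host | input | `<type>` scalar used for multiplication; if beta == 0, C does not have to be a valid input. |
| C | host or device | in/out | `<type>` array of dimension ldc x n with ldc >= max(1, n). |
| ldc | | input | leading dimension of the two-dimensional array used to store matrix C. |

The possible error values returned by this function and their meanings are listed below.

| Error Value | Meaning |
|---|---|
| CUBLAS_STATUS_SUCCESS | the operation completed successfully |
| CUBLAS_STATUS_NOT_INITIALIZED | the library was not initialized |
| CUBLAS_STATUS_INVALID_VALUE | the parameters n, k < 0 |
| CUBLAS_STATUS_EXECUTION_FAILED | the function failed to launch on the GPU |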
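A rank-k update writes only the triangle of C selected by uplo. The following host-side reference, with the hypothetical name `ref_dsyrk_lower_n`, covers the lower-triangle, non-transposed case C = α A A^T + β C and is meant only as an illustration of that behavior.

```c
#include <assert.h>
#include <stddef.h>

/* Reference symmetric rank-k update for uplo = lower, trans = N.
   A is n x k, C is n x n, both column-major. Only elements with
   i >= j are written; the strict upper triangle of C is untouched. */
void ref_dsyrk_lower_n(size_t n, size_t k, double alpha,
                       const double *A, size_t lda,
                       double beta, double *C, size_t ldc)
{
    assert(lda >= n && ldc >= n);
    for (size_t j = 0; j < n; ++j) {
        for (size_t i = j; i < n; ++i) {
            double acc = 0.0;
            for (size_t p = 0; p < k; ++p)
                acc += A[i + p * lda] * A[j + p * lda];
            double prev = (beta == 0.0) ? 0.0 : beta * C[i + j * ldc];
            C[i + j * ldc] = alpha * acc + prev;
        }
    }
}
```

Skipping the other triangle roughly halves the arithmetic compared with a general matrix product of the same size.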

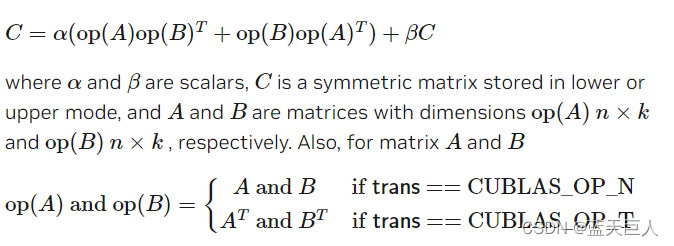

`cublasXt<t>syr2k()`

cublasStatus_t cublasXtSsyr2k(cublasXtHandle_t handle,cublasFillMode_t uplo, cublasOperation_t trans,size_t n, size_t k,const float *alpha,const float *A, size_t lda,const float *B, size_t ldb,const float *beta,float *C, size_t ldc)

cublasStatus_t cublasXtDsyr2k(cublasXtHandle_t handle,cublasFillMode_t uplo, cublasOperation_t trans,size_t n, size_t k,const double *alpha,const double *A, size_t lda,const double *B, size_t ldb,const double *beta,double *C, size_t ldc)

cublasStatus_t cublasXtCsyr2k(cublasXtHandle_t handle,cublasFillMode_t uplo, cublasOperation_t trans,size_t n, size_t k,const cuComplex *alpha,const cuComplex *A, size_t lda,const cuComplex *B, size_t ldb,const cuComplex *beta,cuComplex *C, size_t ldc)

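cublasStatus_t cublasXtZsyr2k(cublasXtHandle_t handle,cublasFillMode_t uplo, cublasOperation_t trans,size_t n, size_t k,const cuDoubleComplex *alpha,const cuDoubleComplex *A, size_t lda,const cuDoubleComplex *B, size_t ldb,const cuDoubleComplex *beta,cuDoubleComplex *C, size_t ldc)

This function performs the symmetric rank-2k update C = α (op(A) op(B)^T + op(B) op(A)^T) + β C, where α and β are scalars, C is a symmetric matrix stored in lower or upper mode, and op(A) and op(B) have dimensions n x k.

| Param. | Memory | In/out | Meaning |
|---|---|---|---|
| handle | | input | handle to the cuBLASXt API context. |
| uplo | | input | indicates if the lower or upper part of matrix C is stored; the other symmetric part is not referenced and is inferred from the stored elements. |
| trans | | input | operation op(A) that is non- or transpose. |
| n | | input | number of rows of matrix op(A), op(B) and C. |
| k | | input | number of columns of matrix op(A) and op(B). |
| alpha | host | input | `<type>` scalar used for multiplication. |
| A | host or device | input | `<type>` array of dimension lda x k with lda >= max(1, n) if trans == CUBLAS_OP_N, and lda x n with lda >= max(1, k) otherwise. |
| lda | | input | leading dimension of the two-dimensional array used to store matrix A. |
| B | host or device | input | `<type>` array of dimension ldb x k with ldb >= max(1, n) if trans == CUBLAS_OP_N, and ldb x n with ldb >= max(1, k) otherwise. |
| ldb | | input | leading dimension of the two-dimensional array used to store matrix B. |
| beta | host | input | `<type>` scalar used for multiplication; if beta == 0, C does not have to be a valid input. |
| C | host or device | in/out | `<type>` array of dimension ldc x n with ldc >= max(1, n). |
| ldc | | input | leading dimension of the two-dimensional array used to store matrix C. |

The possible error values returned by this function and their meanings are listed below.

| Error Value | Meaning |
|---|---|
| CUBLAS_STATUS_SUCCESS | the operation completed successfully |
| CUBLAS_STATUS_NOT_INITIALIZED | the library was not initialized |
| CUBLAS_STATUS_INVALID_VALUE | the parameters n, k < 0 |
| CUBLAS_STATUS_EXECUTION_FAILED | the function failed to launch on the GPU |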
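For comparison with the rank-k case, a host-side reference of the rank-2k update in the lower-triangle, non-transposed case is sketched below. `ref_dsyr2k_lower_n` is a hypothetical helper for illustration; the two products op(A) op(B)^T and op(B) op(A)^T are accumulated together so that the result is symmetric by construction.

```c
#include <assert.h>
#include <stddef.h>

/* Reference C = alpha * (A * B^T + B * A^T) + beta * C for
   uplo = lower, trans = N. A and B are n x k, C is n x n,
   all column-major; only elements with i >= j are written. */
void ref_dsyr2k_lower_n(size_t n, size_t k, double alpha,
                        const double *A, size_t lda,
                        const double *B, size_t ldb,
                        double beta, double *C, size_t ldc)
{
    assert(lda >= n && ldb >= n && ldc >= n);
    for (size_t j = 0; j < n; ++j) {
        for (size_t i = j; i < n; ++i) {
            double acc = 0.0;
            for (size_t p = 0; p < k; ++p)
                acc += A[i + p * lda] * B[j + p * ldb]
                     + B[i + p * ldb] * A[j + p * lda];
            double prev = (beta == 0.0) ? 0.0 : beta * C[i + j * ldc];
            C[i + j * ldc] = alpha * acc + prev;
        }
    }
}
```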

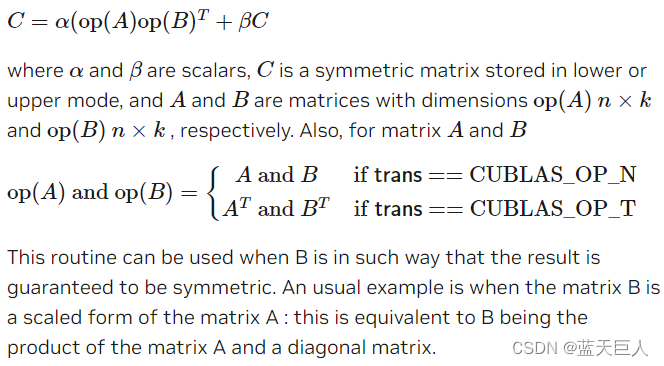

`cublasXt<t>syrkx()`

cublasStatus_t cublasXtSsyrkx(cublasXtHandle_t handle,cublasFillMode_t uplo, cublasOperation_t trans,size_t n, size_t k,const float *alpha,const float *A, size_t lda,const float *B, size_t ldb,const float *beta,float *C, size_t ldc)

cublasStatus_t cublasXtDsyrkx(cublasXtHandle_t handle,cublasFillMode_t uplo, cublasOperation_t trans,size_t n, size_t k,const double *alpha,const double *A, size_t lda,const double *B, size_t ldb,const double *beta,double *C, size_t ldc)

cublasStatus_t cublasXtCsyrkx(cublasXtHandle_t handle,cublasFillMode_t uplo, cublasOperation_t trans,size_t n, size_t k,const cuComplex *alpha,const cuComplex *A, size_t lda,const cuComplex *B, size_t ldb,const cuComplex *beta,cuComplex *C, size_t ldc)

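cublasStatus_t cublasXtZsyrkx(cublasXtHandle_t handle,cublasFillMode_t uplo, cublasOperation_t trans,size_t n, size_t k,const cuDoubleComplex *alpha,const cuDoubleComplex *A, size_t lda,const cuDoubleComplex *B, size_t ldb,const cuDoubleComplex *beta,cuDoubleComplex *C, size_t ldc)

This function performs a variation of the symmetric rank-k update, C = α op(A) op(B)^T + β C, where α and β are scalars, C is a symmetric matrix stored in lower or upper mode, and op(A) and op(B) have dimensions n x k. It can be used when B is such that the result is guaranteed to be symmetric, for example when B is a scaled form of A (the product of A and a diagonal matrix).

| Param. | Memory | In/out | Meaning |
|---|---|---|---|
| handle | | input | handle to the cuBLASXt API context. |
| uplo | | input | indicates if the lower or upper part of matrix C is stored; the other symmetric part is not referenced and is inferred from the stored elements. |
| trans | | input | operation op(A) that is non- or transpose. |
| n | | input | number of rows of matrix op(A), op(B) and C. |
| k | | input | number of columns of matrix op(A) and op(B). |
| alpha | host | input | `<type>` scalar used for multiplication. |
| A | host or device | input | `<type>` array of dimension lda x k with lda >= max(1, n) if trans == CUBLAS_OP_N, and lda x n with lda >= max(1, k) otherwise. |
| lda | | input | leading dimension of the two-dimensional array used to store matrix A. |
| B | host or device | input | `<type>` array of dimension ldb x k with ldb >= max(1, n) if trans == CUBLAS_OP_N, and ldb x n with ldb >= max(1, k) otherwise. |
| ldb | | input | leading dimension of the two-dimensional array used to store matrix B. |
| beta | host | input | `<type>` scalar used for multiplication; if beta == 0, C does not have to be a valid input. |
| C | host or device | in/out | `<type>` array of dimension ldc x n with ldc >= max(1, n). |
| ldc | | input | leading dimension of the two-dimensional array used to store matrix C. |

The possible error values returned by this function and their meanings are listed below.

| Error Value | Meaning |
|---|---|
| CUBLAS_STATUS_SUCCESS | the operation completed successfully |
| CUBLAS_STATUS_NOT_INITIALIZED | the library was not initialized |
| CUBLAS_STATUS_INVALID_VALUE | the parameters n, k < 0 |
| CUBLAS_STATUS_EXECUTION_FAILED | the function failed to launch on the GPU |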

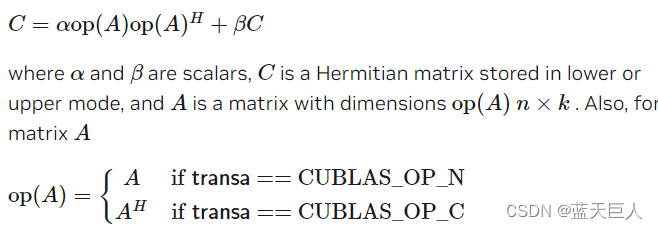

`cublasXt<t>herk()`

cublasStatus_t cublasXtCherk(cublasXtHandle_t handle,cublasFillMode_t uplo, cublasOperation_t trans,int n, int k,const float *alpha,const cuComplex *A, int lda,const float *beta,cuComplex *C, int ldc)

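cublasStatus_t cublasXtZherk(cublasXtHandle_t handle,cublasFillMode_t uplo, cublasOperation_t trans,int n, int k,const double *alpha,const cuDoubleComplex *A, int lda,const double *beta,cuDoubleComplex *C, int ldc)

This function performs the Hermitian rank-k update C = α op(A) op(A)^H + β C, where α and β are real scalars, C is a Hermitian matrix stored in lower or upper mode, and op(A) has dimensions n x k. op(A) is A if trans == CUBLAS_OP_N and A^H if trans == CUBLAS_OP_C.

| Param. | Memory | In/out | Meaning |
|---|---|---|---|
| handle | | input | handle to the cuBLASXt API context. |
| uplo | | input | indicates if the lower or upper part of matrix C is stored; the other Hermitian part is not referenced and is inferred from the stored elements. |
| trans | | input | operation op(A) that is non- or conj. transpose. |
| n | | input | number of rows of matrix op(A) and C. |
| k | | input | number of columns of matrix op(A). |
| alpha | host | input | real scalar used for multiplication. |
| A | host or device | input | `<type>` array of dimension lda x k with lda >= max(1, n) if trans == CUBLAS_OP_N, and lda x n with lda >= max(1, k) otherwise. |
| lda | | input | leading dimension of the two-dimensional array used to store matrix A. |
| beta | host | input | real scalar used for multiplication; if beta == 0, C does not have to be a valid input. |
| C | host or device | in/out | `<type>` array of dimension ldc x n with ldc >= max(1, n). The imaginary parts of the diagonal elements are assumed and set to zero. |
| ldc | | input | leading dimension of the two-dimensional array used to store matrix C. |

The possible error values returned by this function and their meanings are listed below.

| Error Value | Meaning |
|---|---|
| CUBLAS_STATUS_SUCCESS | the operation completed successfully |
| CUBLAS_STATUS_NOT_INITIALIZED | the library was not initialized |
| CUBLAS_STATUS_INVALID_VALUE | the parameters n, k < 0 |
| CUBLAS_STATUS_EXECUTION_FAILED | the function failed to launch on the GPU |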

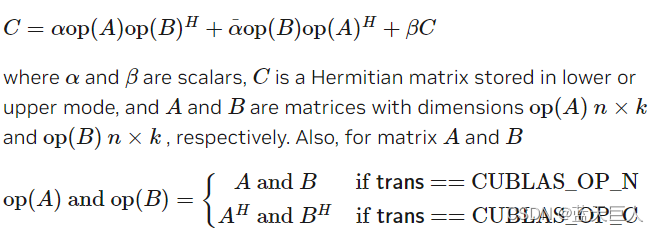

`cublasXt<t>her2k()`

cublasStatus_t cublasXtCher2k(cublasXtHandle_t handle,cublasFillMode_t uplo, cublasOperation_t trans,size_t n, size_t k,const cuComplex *alpha,const cuComplex *A, size_t lda,const cuComplex *B, size_t ldb,const float *beta,cuComplex *C, size_t ldc)

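cublasStatus_t cublasXtZher2k(cublasXtHandle_t handle,cublasFillMode_t uplo, cublasOperation_t trans,size_t n, size_t k,const cuDoubleComplex *alpha,const cuDoubleComplex *A, size_t lda,const cuDoubleComplex *B, size_t ldb,const double *beta,cuDoubleComplex *C, size_t ldc)

This function performs the Hermitian rank-2k update C = α op(A) op(B)^H + conj(α) op(B) op(A)^H + β C, where α is a complex scalar, β is a real scalar, C is a Hermitian matrix stored in lower or upper mode, and op(A) and op(B) have dimensions n x k.

| Param. | Memory | In/out | Meaning |
|---|---|---|---|
| handle | | input | handle to the cuBLASXt API context. |
| uplo | | input | indicates if the lower or upper part of matrix C is stored; the other Hermitian part is not referenced and is inferred from the stored elements. |
| trans | | input | operation op(A) that is non- or conj. transpose. |
| n | | input | number of rows of matrix op(A), op(B) and C. |
| k | | input | number of columns of matrix op(A) and op(B). |
| alpha | host | input | `<type>` scalar used for multiplication. |
| A | host or device | input | `<type>` array of dimension lda x k with lda >= max(1, n) if trans == CUBLAS_OP_N, and lda x n with lda >= max(1, k) otherwise. |
| lda | | input | leading dimension of the two-dimensional array used to store matrix A. |
| B | host or device | input | `<type>` array of dimension ldb x k with ldb >= max(1, n) if trans == CUBLAS_OP_N, and ldb x n with ldb >= max(1, k) otherwise. |
| ldb | | input | leading dimension of the two-dimensional array used to store matrix B. |
| beta | host | input | real scalar used for multiplication; if beta == 0, C does not have to be a valid input. |
| C | host or device | in/out | `<type>` array of dimension ldc x n with ldc >= max(1, n). The imaginary parts of the diagonal elements are assumed and set to zero. |
| ldc | | input | leading dimension of the two-dimensional array used to store matrix C. |

The possible error values returned by this function and their meanings are listed below.

| Error Value | Meaning |
|---|---|
| CUBLAS_STATUS_SUCCESS | the operation completed successfully |
| CUBLAS_STATUS_NOT_INITIALIZED | the library was not initialized |
| CUBLAS_STATUS_INVALID_VALUE | the parameters n, k < 0 |
| CUBLAS_STATUS_EXECUTION_FAILED | the function failed to launch on the GPU |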

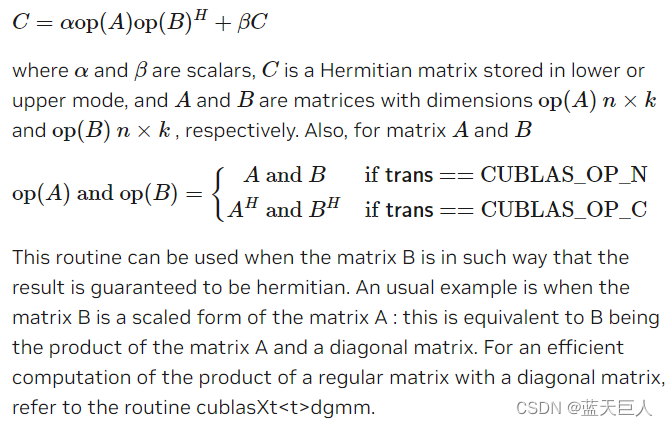

`cublasXt<t>herkx()`

cublasStatus_t cublasXtCherkx(cublasXtHandle_t handle,cublasFillMode_t uplo, cublasOperation_t trans,size_t n, size_t k,const cuComplex *alpha,const cuComplex *A, size_t lda,const cuComplex *B, size_t ldb,const float *beta,cuComplex *C, size_t ldc)

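cublasStatus_t cublasXtZherkx(cublasXtHandle_t handle,cublasFillMode_t uplo, cublasOperation_t trans,size_t n, size_t k,const cuDoubleComplex *alpha,const cuDoubleComplex *A, size_t lda,const cuDoubleComplex *B, size_t ldb,const double *beta,cuDoubleComplex *C, size_t ldc)

This function performs a variation of the Hermitian rank-k update, C = α op(A) op(B)^H + β C, where α is a complex scalar, β is a real scalar, C is a Hermitian matrix stored in lower or upper mode, and op(A) and op(B) have dimensions n x k. It can be used when B is such that the result is guaranteed to be Hermitian, for example when B is a scaled form of A.

| Param. | Memory | In/out | Meaning |
|---|---|---|---|
| handle | | input | handle to the cuBLASXt API context. |
| uplo | | input | indicates if the lower or upper part of matrix C is stored; the other Hermitian part is not referenced and is inferred from the stored elements. |
| trans | | input | operation op(A) that is non- or conj. transpose. |
| n | | input | number of rows of matrix op(A), op(B) and C. |
| k | | input | number of columns of matrix op(A) and op(B). |
| alpha | host | input | `<type>` scalar used for multiplication. |
| A | host or device | input | `<type>` array of dimension lda x k with lda >= max(1, n) if trans == CUBLAS_OP_N, and lda x n with lda >= max(1, k) otherwise. |
| lda | | input | leading dimension of the two-dimensional array used to store matrix A. |
| B | host or device | input | `<type>` array of dimension ldb x k with ldb >= max(1, n) if trans == CUBLAS_OP_N, and ldb x n with ldb >= max(1, k) otherwise. |
| ldb | | input | leading dimension of the two-dimensional array used to store matrix B. |
| beta | host | input | real scalar used for multiplication; if beta == 0, C does not have to be a valid input. |
| C | host or device | in/out | `<type>` array of dimension ldc x n with ldc >= max(1, n). The imaginary parts of the diagonal elements are assumed and set to zero. |
| ldc | | input | leading dimension of the two-dimensional array used to store matrix C. |

The possible error values returned by this function and their meanings are listed below.

| Error Value | Meaning |
|---|---|
| CUBLAS_STATUS_SUCCESS | the operation completed successfully |
| CUBLAS_STATUS_NOT_INITIALIZED | the library was not initialized |
| CUBLAS_STATUS_INVALID_VALUE | the parameters n, k < 0 |
| CUBLAS_STATUS_EXECUTION_FAILED | the function failed to launch on the GPU |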

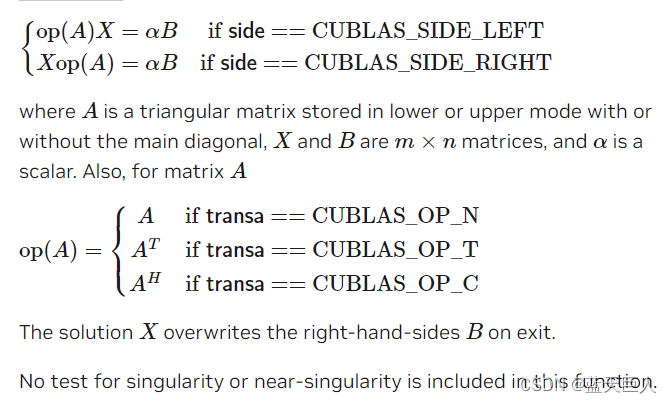

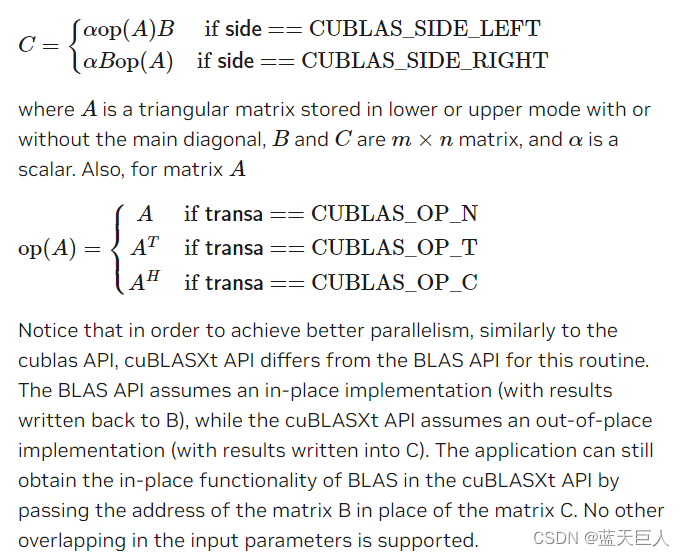

`cublasXt<t>trsm()`

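cublasStatus_t cublasXtStrsm(cublasXtHandle_t handle,cublasSideMode_t side, cublasFillMode_t uplo,cublasOperation_t trans, cublasDiagType_t diag,size_t m, size_t n,const float *alpha,const float *A, size_t lda,float *B, size_t ldb)

cublasStatus_t cublasXtDtrsm(cublasXtHandle_t handle,cublasSideMode_t side, cublasFillMode_t uplo,cublasOperation_t trans, cublasDiagType_t diag,size_t m, size_t n,const double *alpha,const double *A, size_t lda,double *B, size_t ldb)

cublasStatus_t cublasXtCtrsm(cublasXtHandle_t handle,cublasSideMode_t side, cublasFillMode_t uplo,cublasOperation_t trans, cublasDiagType_t diag,size_t m, size_t n,const cuComplex *alpha,const cuComplex *A, size_t lda,cuComplex *B, size_t ldb)

cublasStatus_t cublasXtZtrsm(cublasXtHandle_t handle,cublasSideMode_t side, cublasFillMode_t uplo,cublasOperation_t trans, cublasDiagType_t diag,size_t m, size_t n,const cuDoubleComplex *alpha,const cuDoubleComplex *A, size_t lda,cuDoubleComplex *B, size_t ldb)

This function solves the triangular linear system with multiple right-hand sides: op(A) X = α B if side == CUBLAS_SIDE_LEFT, or X op(A) = α B if side == CUBLAS_SIDE_RIGHT, where A is a triangular matrix stored in lower or upper mode with or without the main diagonal, X and B are m x n matrices, and α is a scalar. The solution X overwrites the right-hand sides B on exit. No test for singularity or near-singularity is included in this function.

| Param. | Memory | In/out | Meaning |
|---|---|---|---|
| handle | | input | handle to the cuBLASXt API context. |
| side | | input | indicates if matrix A is on the left or right of X. |
| uplo | | input | indicates if the lower or upper part of matrix A is stored; the other part is not referenced and is inferred from the stored elements. |
| trans | | input | operation op(A) that is non- or (conj.) transpose. |
| diag | | input | indicates if the elements on the main diagonal of matrix A are unity and should not be accessed. |
| m | | input | number of rows of matrix B, with matrix A sized accordingly. |
| n | | input | number of columns of matrix B, with matrix A sized accordingly. |
| alpha | host | input | `<type>` scalar used for multiplication; if alpha == 0, A is not referenced and B does not have to be a valid input. |
| A | host or device | input | `<type>` array of dimension lda x m with lda >= max(1, m) if side == CUBLAS_SIDE_LEFT, and lda x n with lda >= max(1, n) otherwise. |
| lda | | input | leading dimension of the two-dimensional array used to store matrix A. |
| B | host or device | in/out | `<type>` array of dimension ldb x n with ldb >= max(1, m). |
| ldb | | input | leading dimension of the two-dimensional array used to store matrix B. |

The possible error values returned by this function and their meanings are listed below.

| Error Value | Meaning |
|---|---|
| CUBLAS_STATUS_SUCCESS | the operation completed successfully |
| CUBLAS_STATUS_NOT_INITIALIZED | the library was not initialized |
| CUBLAS_STATUS_INVALID_VALUE | the parameters m, n < 0 |
| CUBLAS_STATUS_EXECUTION_FAILED | the function failed to launch on the GPU |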
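The in-place behavior of the triangular solve is easiest to see on the host. The sketch below is a hypothetical reference, `ref_dtrsm_left_lower`, for one case only: A on the left, lower triangular, not transposed, solved by forward substitution. Like the routine it illustrates, it performs no singularity check.

```c
#include <assert.h>
#include <stddef.h>

/* Solve A * X = alpha * B for X, with A lower triangular (m x m) and
   B of size m x n, both column-major. X overwrites B. When unit_diag
   is nonzero the diagonal of A is taken to be 1 and is not read. */
void ref_dtrsm_left_lower(int unit_diag, size_t m, size_t n, double alpha,
                          const double *A, size_t lda,
                          double *B, size_t ldb)
{
    assert(lda >= m && ldb >= m);
    for (size_t j = 0; j < n; ++j) {
        for (size_t i = 0; i < m; ++i) {
            double x = alpha * B[i + j * ldb];
            /* Rows above i of this column already hold the solution. */
            for (size_t p = 0; p < i; ++p)
                x -= A[i + p * lda] * B[p + j * ldb];
            B[i + j * ldb] = unit_diag ? x : x / A[i + i * lda];
        }
    }
}
```

Each column of B is an independent right-hand side, which is what makes this operation divisible into tiles along the columns.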

`cublasXt<t>trmm()`

cublasStatus_t cublasXtStrmm(cublasXtHandle_t handle,cublasSideMode_t side, cublasFillMode_t uplo,cublasOperation_t trans, cublasDiagType_t diag,size_t m, size_t n,const float *alpha,const float *A, size_t lda,const float *B, size_t ldb,float *C, size_t ldc)

cublasStatus_t cublasXtDtrmm(cublasXtHandle_t handle,cublasSideMode_t side, cublasFillMode_t uplo,cublasOperation_t trans, cublasDiagType_t diag,size_t m, size_t n,const double *alpha,const double *A, size_t lda,const double *B, size_t ldb,double *C, size_t ldc)

cublasStatus_t cublasXtCtrmm(cublasXtHandle_t handle,cublasSideMode_t side, cublasFillMode_t uplo,cublasOperation_t trans, cublasDiagType_t diag,size_t m, size_t n,const cuComplex *alpha,const cuComplex *A, size_t lda,const cuComplex *B, size_t ldb,cuComplex *C, size_t ldc)

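cublasStatus_t cublasXtZtrmm(cublasXtHandle_t handle,cublasSideMode_t side, cublasFillMode_t uplo,cublasOperation_t trans, cublasDiagType_t diag,size_t m, size_t n,const cuDoubleComplex *alpha,const cuDoubleComplex *A, size_t lda,const cuDoubleComplex *B, size_t ldb,cuDoubleComplex *C, size_t ldc)

This function performs the triangular matrix-matrix multiplication C = α op(A) B if side == CUBLAS_SIDE_LEFT, or C = α B op(A) if side == CUBLAS_SIDE_RIGHT, where A is a triangular matrix stored in lower or upper mode with or without the main diagonal, B and C are m x n matrices, and α is a scalar. Unlike the BLAS routine, which writes the result back into B, this routine is out-of-place and writes the result into C.

| Param. | Memory | In/out | Meaning |
|---|---|---|---|
| handle | | input | handle to the cuBLASXt API context. |
| side | | input | indicates if matrix A is on the left or right of B. |
| uplo | | input | indicates if the lower or upper part of matrix A is stored; the other part is not referenced and is inferred from the stored elements. |
| trans | | input | operation op(A) that is non- or (conj.) transpose. |
| diag | | input | indicates if the elements on the main diagonal of matrix A are unity and should not be accessed. |
| m | | input | number of rows of matrix B, with matrix A sized accordingly. |
| n | | input | number of columns of matrix B, with matrix A sized accordingly. |
| alpha | host | input | `<type>` scalar used for multiplication; if alpha == 0, A is not referenced and B does not have to be a valid input. |
| A | host or device | input | `<type>` array of dimension lda x m with lda >= max(1, m) if side == CUBLAS_SIDE_LEFT, and lda x n with lda >= max(1, n) otherwise. |
| lda | | input | leading dimension of the two-dimensional array used to store matrix A. |
| B | host or device | input | `<type>` array of dimension ldb x n with ldb >= max(1, m). |
| ldb | | input | leading dimension of the two-dimensional array used to store matrix B. |
| C | host or device | in/out | `<type>` array of dimension ldc x n with ldc >= max(1, m). |
| ldc | | input | leading dimension of the two-dimensional array used to store matrix C. |

The possible error values returned by this function and their meanings are listed below.

| Error Value | Meaning |
|---|---|
| CUBLAS_STATUS_SUCCESS | the operation completed successfully |
| CUBLAS_STATUS_NOT_INITIALIZED | the library was not initialized |
| CUBLAS_STATUS_INVALID_VALUE | the parameters m, n < 0 |
| CUBLAS_STATUS_EXECUTION_FAILED | the function failed to launch on the GPU |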

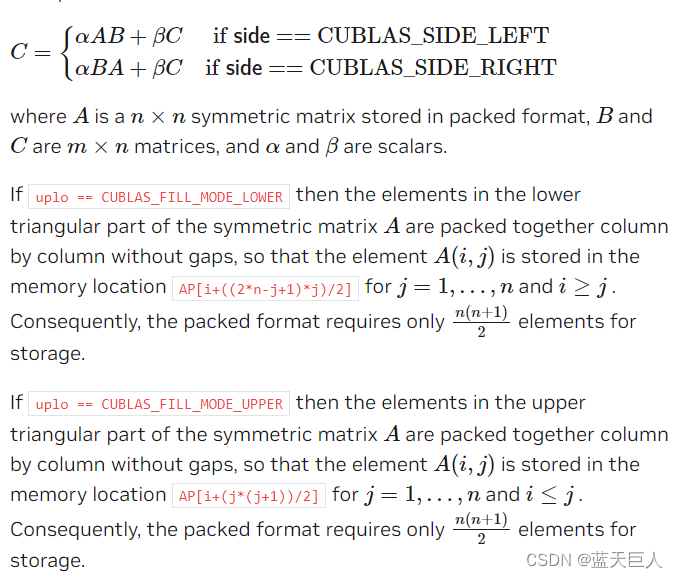

`cublasXt<t>spmm()`

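cublasStatus_t cublasXtSspmm(cublasXtHandle_t handle,cublasSideMode_t side,cublasFillMode_t uplo,size_t m,size_t n,const float *alpha,const float *AP,const float *B,size_t ldb,const float *beta,float *C,size_t ldc)

cublasStatus_t cublasXtDspmm(cublasXtHandle_t handle,cublasSideMode_t side,cublasFillMode_t uplo,size_t m,size_t n,const double *alpha,const double *AP,const double *B,size_t ldb,const double *beta,double *C,size_t ldc)

cublasStatus_t cublasXtCspmm(cublasXtHandle_t handle,cublasSideMode_t side,cublasFillMode_t uplo,size_t m,size_t n,const cuComplex *alpha,const cuComplex *AP,const cuComplex *B,size_t ldb,const cuComplex *beta,cuComplex *C,size_t ldc)

cublasStatus_t cublasXtZspmm(cublasXtHandle_t handle,cublasSideMode_t side,cublasFillMode_t uplo,size_t m,size_t n,const cuDoubleComplex *alpha,const cuDoubleComplex *AP,const cuDoubleComplex *B,size_t ldb,const cuDoubleComplex *beta,cuDoubleComplex *C,size_t ldc)

This function performs the symmetric packed matrix-matrix multiplication C = α A B + β C if side == CUBLAS_SIDE_LEFT, or C = α B A + β C if side == CUBLAS_SIDE_RIGHT, where A is a symmetric matrix stored in packed format (AP), B and C are m x n matrices, and α and β are scalars. The packed matrix AP must be located on the host, whereas the other matrices can be located on the host or on any GPU device.

| Param. | Memory | In/out | Meaning |
|---|---|---|---|
| handle | | input | handle to the cuBLASXt API context. |
| side | | input | indicates if matrix A is on the left or right of B. |
| uplo | | input | indicates if the lower or upper part of matrix A is stored; the other symmetric part is not referenced and is inferred from the stored elements. |
| m | | input | number of rows of matrix C and B, with matrix A sized accordingly. |
| n | | input | number of columns of matrix C and B, with matrix A sized accordingly. |
| alpha | host | input | `<type>` scalar used for multiplication. |
| AP | host | input | `<type>` array with A stored in packed format. |
| B | host or device | input | `<type>` array of dimension ldb x n with ldb >= max(1, m). |
| ldb | | input | leading dimension of the two-dimensional array used to store matrix B. |
| beta | host | input | `<type>` scalar used for multiplication; if beta == 0, C does not have to be a valid input. |
| C | host or device | in/out | `<type>` array of dimension ldc x n with ldc >= max(1, m). |
| ldc | | input | leading dimension of the two-dimensional array used to store matrix C. |

The possible error values returned by this function and their meanings are listed below.

| Error Value | Meaning |
|---|---|
| CUBLAS_STATUS_SUCCESS | the operation completed successfully |
| CUBLAS_STATUS_NOT_INITIALIZED | the library was not initialized |
| CUBLAS_STATUS_INVALID_VALUE | the parameters m, n < 0 |
| CUBLAS_STATUS_NOT_SUPPORTED | the matrix AP is located on a GPU device |
| CUBLAS_STATUS_EXECUTION_FAILED | the function failed to launch on the GPU |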
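Packed storage keeps only one triangle of the symmetric matrix, column by column and without gaps. The sketch below shows the standard BLAS packed-index mapping with zero-based indices; the function name `packed_index` is hypothetical, and the assumption is that AP follows this usual BLAS layout.

```c
#include <assert.h>
#include <stddef.h>

/* Position in the packed array AP of element (i, j) of an n x n
   symmetric matrix, zero-based, triangle stored column by column.
   Indices outside the stored triangle are mirrored first, using
   A(i, j) == A(j, i). */
size_t packed_index(int lower, size_t n, size_t i, size_t j)
{
    assert(i < n && j < n);
    if (lower ? (i < j) : (i > j)) {
        size_t t = i;
        i = j;
        j = t;
    }
    return lower ? i + (j * (2 * n - j - 1)) / 2
                 : i + (j * (j + 1)) / 2;
}
```

A packed n x n matrix therefore occupies n (n + 1) / 2 elements instead of n x n, at the cost of this index arithmetic on every access.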